Browse our archives by topic…

Editions

.NET Development

View all (327)

.NET JsonElement and Schema Validation

Corvus.JsonSchema enables safe use of the very high performance JSON parsing offered by .NET's System.Text.Json.

ASP.NET Core + Razor + HTMX + Chart.js

How to combine ASP.NET Core, Razor, and HTMX to power interactive Chart.js visualisations.

adr - A .NET Tool for Creating & Managing Architecture Decision Records

Architectural Decision Records (ADRs) capture context, options, decisions, and consequences. dotnet-adr is a .NET tool for managing ADRs.

Analytics

View all (135)

Data is a socio-technical endeavour

Our experience shows that the most successful data projects rely heavily on building a multi-disciplinary team.

Data and AI Engineering Maturity - Fix our problems before we hit the buffers

As data and AI become the engine of business change, we need to learn the lessons of the past to avoid expensive failures.

SQLbits 2024 - The Best Bits

This is a summary of the sessions I attended at SQLbits 2024 - Europe's largest expert-led data conference. This year SQLbits was hosted at Farnborough IECC, Hampshire.

Apprenticeship

View all (57)

Life as an Apprentice Engineer at endjin

Eli joined endjin as part of the Software Engineering Apprenticeship 2021 cohort. In this post she reflects on her first two years.

My year in industry as a whole

As Charlotte's placement comes to an end, she reflects on her year at endjin, highlighting the experiences she will take back to University with her.

My Year in Industry so far

Charlotte is studying for a Bachelor of Engineering - BEng (Hons), Computer Science at the University of York. She was part of our 2021 internship cohort, and is spending her Year in Industry placement with endjin too. In this post she reflects on her first 6 months.

Architecture

View all (66)

No-code/Low-code is software DIY - how do you avoid DIY disaster?

No-code/Low-code democratizes software development with little to no coding skills needed. But how do you evaluate if software DIY is the right choice for you?

Architecture Decision Records

Explore the benefits of Architecture Decision Records (ADRs) in technical design, with real-world examples applied to different scenarios.

Using Cloud CI/CD in Zero Trust Environments

Performing certain deployment operations from a cloud-based CI/CD agent against resources that are only accessible via private networking is problematic. The often-cited solution is to use your own hosted agents that are also connected to the same private networks; however, this introduces additional costs and maintenance overhead. This post discusses an approach that combines the use of cloud-based CI/CD agents with 'just-in-time' allow-listing as an alternative to operating your own private agents.

Automation

View all (6)

Power Query - Where can you use it? - Power BI

In this series of posts, we look at all the places where you can integrate Power Query as part of your data solutions. Here we look at Power BI.

Power Query - Where can you use it? - Microsoft 365

In this series of posts, we look at all the places where you can integrate Power Query as part of your data solutions. Here we look at Microsoft 365.

Using the Playwright C# SDK to automate 2FA authentication for AAD and MSA

Learn to configure AAD or MSA 2FA profiles for UI automation testing with Time-based One-Time Passwords.

Azure

View all (183)

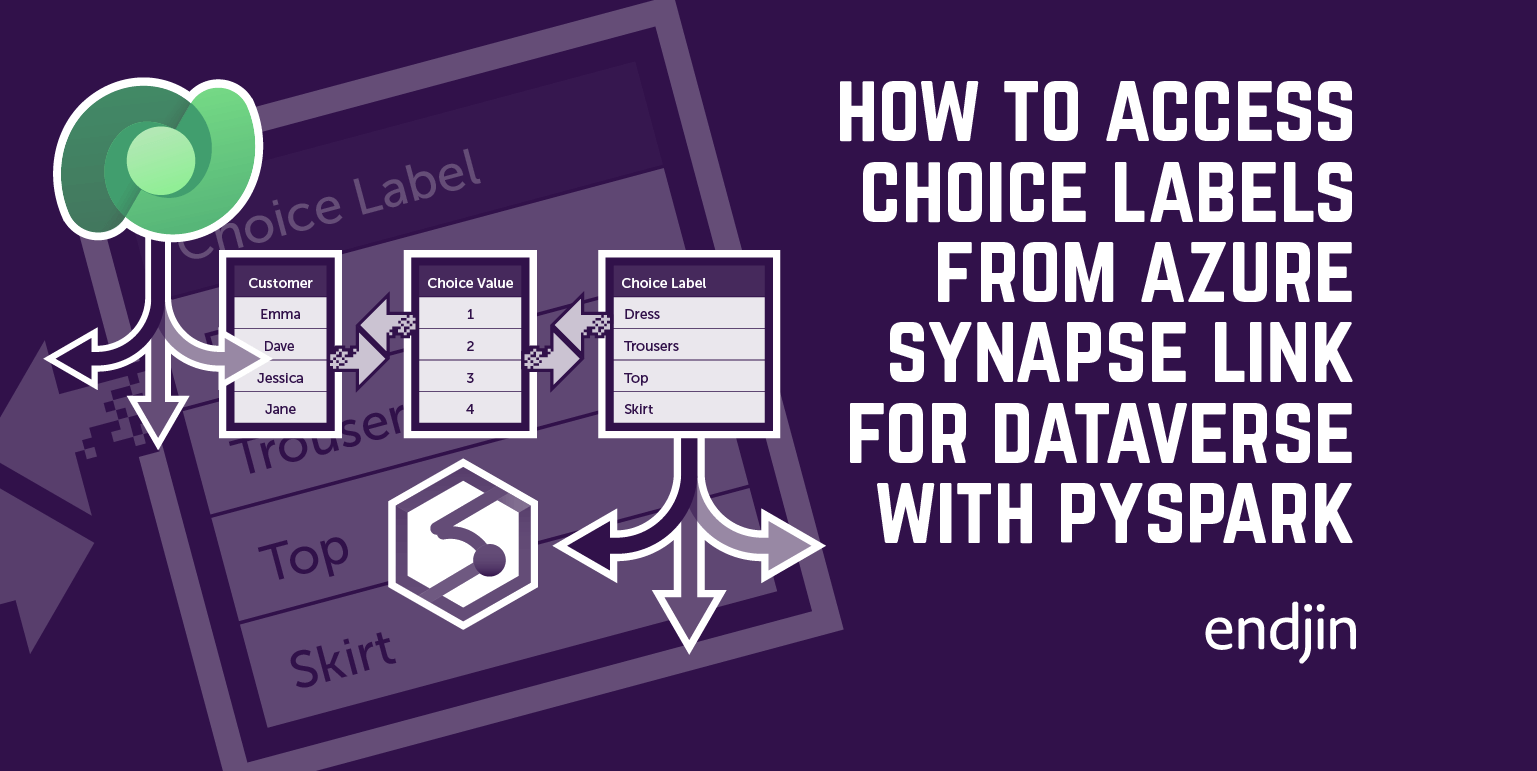

How to access multi-select choice column choice labels from Azure Synapse Link for Dataverse with PySpark or SQL

Learn how to access multi-select choice column choice labels from Azure Synapse Link for Dataverse using PySpark or SQL.

How to access choice labels from Azure Synapse Link for Dataverse with SQL

Learn how to access the choice labels from Azure Synapse Link for Dataverse using T-SQL through SQL Serverless and by using Spark SQL in a Synapse Notebook.

How to access choice labels from Azure Synapse Link for Dataverse with PySpark

Learn how to access the choice labels from Azure Synapse Link for Dataverse using PySpark.

Azure Synapse Analytics

View all (41)

How to access multi-select choice column choice labels from Azure Synapse Link for Dataverse with PySpark or SQL

Learn how to access multi-select choice column choice labels from Azure Synapse Link for Dataverse using PySpark or SQL.

Introduction to Python Logging in Synapse Notebooks

The first step on the road to implementing observability in your Python notebooks is basic logging. In this post, we look at how you can use Python's built-in logging inside a Synapse notebook.

How to access choice labels from Azure Synapse Link for Dataverse with SQL

Learn how to access the choice labels from Azure Synapse Link for Dataverse using T-SQL through SQL Serverless and by using Spark SQL in a Synapse Notebook.

Big Compute

View all (27)

Azure Synapse Analytics: How serverless is replacing the data warehouse

Serverless data architectures enable leaner data insights and operations. How do you reap the rewards while avoiding the potential pitfalls?

Benchmarking Azure Synapse Analytics - SQL Serverless, using Polyglot Notebooks

New Azure Synapse Analytics service offers SQL Serverless for on-demand data lake queries. We tested its potential as a Data Lake Analytics replacement.

Does Azure Synapse Analytics spell the end for Azure Databricks?

Explore why Microsoft's new Spark offering in Azure Synapse Analytics is a game-changer for Azure Databricks investors.

Big Data

View all (96)

Data is a socio-technical endeavour

Our experience shows that the most successful data projects rely heavily on building a multi-disciplinary team.

Data and AI Engineering Maturity - Fix our problems before we hit the buffers

As data and AI become the engine of business change, we need to learn the lessons of the past to avoid expensive failures.

SQLbits 2024 - The Best Bits

This is a summary of the sessions I attended at SQLbits 2024 - Europe's largest expert-led data conference. This year SQLbits was hosted at Farnborough IECC, Hampshire.

Culture

View all (128)

Data is a socio-technical endeavour

Our experience shows that the most successful data projects rely heavily on building a multi-disciplinary team.

Data and AI Engineering Maturity - Fix our problems before we hit the buffers

As data and AI become the engine of business change, we need to learn the lessons of the past to avoid expensive failures.

Life as an Apprentice Engineer at endjin

Eli joined endjin as part of the Software Engineering Apprenticeship 2021 cohort. In this post she reflects on her first two years.

Data Engineering

View all (26)

Introduction to Python Logging in Synapse Notebooks

The first step on the road to implementing observability in your Python notebooks is basic logging. In this post, we look at how you can use Python's built-in logging inside a Synapse notebook.

Star Schemas are fundamental to unleashing value from data in Microsoft Fabric

Ralph Kimball's 1996 Star Schema principles still apply in Cloud Native Analytics.

Adopt A Product Mindset To Maximise Value From Microsoft Fabric

In this post I describe how adopting a product mindset will help you to extract maximum value from Microsoft Fabric.

Databricks

View all (8)

Intro to Microsoft Fabric

Microsoft Fabric unifies data & analytics, building on Azure Synapse Analytics for improved data-level interoperability. Explore its offerings & pros/cons.

Version Control in Databricks

Explore how to implement source control in Databricks notebooks, promoting software engineering best practices.

How to use SQL Notebooks to access Azure Synapse SQL Pools & SQL on demand

Wishing Azure Synapse Analytics had support for SQL notebooks? Fear not, it's easy to take advantage of rich interactive notebooks for SQL Pools and SQL on Demand.

Dataverse

View all (3)

How to access multi-select choice column choice labels from Azure Synapse Link for Dataverse with PySpark or SQL

Learn how to access multi-select choice column choice labels from Azure Synapse Link for Dataverse using PySpark or SQL.

How to access choice labels from Azure Synapse Link for Dataverse with SQL

Learn how to access the choice labels from Azure Synapse Link for Dataverse using T-SQL through SQL Serverless and by using Spark SQL in a Synapse Notebook.

How to access choice labels from Azure Synapse Link for Dataverse with PySpark

Learn how to access the choice labels from Azure Synapse Link for Dataverse using PySpark.

Design

View all (1)

Developing a new JSON Schema Brand and Website

Discover Paul's guide on expanding brand collateral for organizations through effective website development and design strategies.

DevOps

View all (33)

Polyglot Notebooks for Ops

Polyglot Notebooks' PowerShell support enhances IT Ops with robust, repeatable processes via 'executable documentation'.

Exploring OpenChain: From License Compliance to Security Assurance

Open-source software has become an essential part of many organisations' software supply chains; however, this poses challenges with license compliance and security assurance.

Data validation in Python: a look into Pandera and Great Expectations

Implement Python data validation with Pandera & Great Expectations in this comparison of their features and use cases.

Engineering Practices

View all (145)

adr - A .NET Tool for Creating & Managing Architecture Decision Records

Architectural Decision Records (ADRs) capture context, options, decisions, and consequences. dotnet-adr is a .NET tool for managing ADRs.

Data and AI Engineering Maturity - Fix our problems before we hit the buffers

As data and AI become the engine of business change, we need to learn the lessons of the past to avoid expensive failures.

No-code/Low-code is software DIY - how do you avoid DIY disaster?

No-code/Low-code democratizes software development with little to no coding skills needed. But how do you evaluate if software DIY is the right choice for you?

Innovation

View all (30)

How to Monetize APIs with Azure API Management

Explore monetizing APIs with our guide. We offer strategies, videos, and code via Azure API Management to fast-track your business model.

Do robots dream of counting sheep?

Some thoughts inspired by helping out on the farm over the weekend. What is the future of work given the increasing presence of machines in our day-to-day lives? In which situations can AI deliver greatest value? How can we ease the stress of digital transformation on people who are impacted by it?

Azure Synapse Analytics: How serverless is replacing the data warehouse

Serverless data architectures enable leaner data insights and operations. How do you reap the rewards while avoiding the potential pitfalls?

Internet of Things

View all (15)

Do robots dream of counting sheep?

Some thoughts inspired by helping out on the farm over the weekend. What is the future of work given the increasing presence of machines in our day-to-day lives? In which situations can AI deliver greatest value? How can we ease the stress of digital transformation on people who are impacted by it?

How to use SQL Notebooks to access Azure Synapse SQL Pools & SQL on demand

Wishing Azure Synapse Analytics had support for SQL notebooks? Fear not, it's easy to take advantage of rich interactive notebooks for SQL Pools and SQL on Demand.

ArrayPool vs MemoryPool—minimizing allocations in AIS.NET

Tracking down unexpected allocations in a high-performance .NET parsing library.

Machine Learning

View all (32)

SQLbits 2024 - The Best Bits

This is a summary of the sessions I attended at SQLbits 2024 - Europe's largest expert-led data conference. This year SQLbits was hosted at Farnborough IECC, Hampshire.

SQLbits 2023 - The Best Bits

This is a summary of the sessions I attended at SQLbits 2023, Europe's largest expert-led data conference, held in Newport, Wales.

SQLbits 2022 - The Best Bits

This is a summary of the sessions I attended at SQLbits 2022, Europe's largest expert-led data conference, held in London.

Microsoft Fabric

View all (12)

Introduction to Python Logging in Synapse Notebooks

The first step on the road to implementing observability in your Python notebooks is basic logging. In this post, we look at how you can use Python's built-in logging inside a Synapse notebook.

Star Schemas are fundamental to unleashing value from data in Microsoft Fabric

Ralph Kimball's 1996 Star Schema principles still apply in Cloud Native Analytics.

Adopt A Product Mindset To Maximise Value From Microsoft Fabric

In this post I describe how adopting a product mindset will help you to extract maximum value from Microsoft Fabric.

Open Source

View all (63)

Learn Reactive Programming for FREE: Introduction to Rx.NET 2nd Edition (2024)

Learn Reactive Programming with our free book, Introduction to Rx.NET 2nd Edition (2024), available in PDF, EPUB, online, and GitHub.

Implementing the OpenChain Specification

After a year of working on implementing the OpenChain specification, this post takes you through the processes we created to track and manage our open-source licenses.

Rx.NET v6.0 Now Available

For the first time since 2020, a new release of Rx.NET is available, supporting .NET 6 and .NET 7.

OpenChain

View all (4)

Exploring OpenChain: From License Compliance to Security Assurance

Open-source software has become an essential part of many organisations' software supply chains; however, this poses challenges with license compliance and security assurance.

The OpenChain specification explained

When implementing OpenChain, understanding the specification will help guide your organisation towards having processes in place to review and manage open-source software.

What are the risks with open-source software?

The key risks associated with open-source software, whether you use it minimally or throughout all your systems.

Power Apps

View all (1)

Styling and Enhancing Model Driven Apps in Power Apps

Discover a concise guide on improving Model Driven Power Apps styles with step-by-step instructions for a polished user experience.

Power BI

View all (67)

How to Build Navigation into Power BI

Explore a step-by-step guide on designing a side nav in Power BI, covering form, icons, states, actions, with a view to enhancing report design & UI.

Star Schemas are fundamental to unleashing value from data in Microsoft Fabric

Ralph Kimball's 1996 Star Schema principles still apply in Cloud Native Analytics.

Microsoft Fabric: Announced

Microsoft Fabric unifies Power BI, Data Factory & Data Lake on Synapse infrastructure, reducing cost & time while enabling citizen data science.

Python

View all (17)

Introduction to Python Logging in Synapse Notebooks

The first step on the road to implementing observability in your Python notebooks is basic logging. In this post, we look at how you can use Python's built-in logging inside a Synapse notebook.

Azure Synapse Analytics versus Microsoft Fabric: A Side by Side Comparison

In this Microsoft Fabric vs Synapse comparison we examine how features map from Azure Synapse to Fabric.

Data validation in Python: a look into Pandera and Great Expectations

Implement Python data validation with Pandera & Great Expectations in this comparison of their features and use cases.

Security and Compliance

View all (28)

No-code/Low-code is software DIY - how do you avoid DIY disaster?

No-code/Low-code democratizes software development with little to no coding skills needed. But how do you evaluate if software DIY is the right choice for you?

Exploring OpenChain: From License Compliance to Security Assurance

Open-source software has become an essential part of many organisations' software supply chains; however, this poses challenges with license compliance and security assurance.

The OpenChain specification explained

When implementing OpenChain, understanding the specification will help guide your organisation towards having processes in place to review and manage open-source software.

Serverless

View all (1)

Azure Synapse Analytics versus Microsoft Fabric: A Side by Side Comparison

In this Microsoft Fabric vs Synapse comparison we examine how features map from Azure Synapse to Fabric.

Startups

View all (15)

How to Monetize APIs with Azure API Management

Explore monetizing APIs with our guide. We offer strategies, videos, and code via Azure API Management to fast-track your business model.

10 ways working with Microsoft helped endjin grow since 2010

Microsoft recently shot a video interviewing endjin co-founder, Howard van Rooijen, and Director of Engineering, James Broome, about how Microsoft has helped endjin grow over the past decade. This post lists the top 10 ways in which Microsoft helped - from providing access to valuable software and services, to opening up sales channels, to helping to navigate the minefield of UK Financial Services regulations around cloud adoption.

What makes a successful FinTech start-up?

In this post we discuss the characteristics of a great FinTech startup, and the importance of the API Economy to innovation in Financial Services.

Strategy

View all (69)

Data is a socio-technical endeavour

Our experience shows that the most successful data projects rely heavily on building a multi-disciplinary team.

Data and AI Engineering Maturity - Fix our problems before we hit the buffers

As data and AI become the engine of business change, we need to learn the lessons of the past to avoid expensive failures.

SQLbits 2024 - The Best Bits

This is a summary of the sessions I attended at SQLbits 2024 - Europe's largest expert-led data conference. This year SQLbits was hosted at Farnborough IECC, Hampshire.

UX

View all (22)

How to Build Navigation into Power BI

Explore a step-by-step guide on designing a side nav in Power BI, covering form, icons, states, actions, with a view to enhancing report design & UI.

Developing a new JSON Schema Brand and Website

Discover Paul's guide on expanding brand collateral for organizations through effective website development and design strategies.

Styling and Enhancing Model Driven Apps in Power Apps

Discover a concise guide on improving Model Driven Power Apps styles with step-by-step instructions for a polished user experience.

Visualisation

View all (15)

How to Build Navigation into Power BI

Explore a step-by-step guide on designing a side nav in Power BI, covering form, icons, states, actions, with a view to enhancing report design & UI.

Developing a new JSON Schema Brand and Website

Discover Paul's guide on expanding brand collateral for organizations through effective website development and design strategies.

Styling and Enhancing Model Driven Apps in Power Apps

Discover a concise guide on improving Model Driven Power Apps styles with step-by-step instructions for a polished user experience.